Agnostic Rendering, hhcib?

Time to leave my comfort zone and explore something I've never done before. I'm usually focused on making cloud platforms more scalable, agnostic and interoperable, but I'm also really passionate about computer graphics. So, in the computer graphics world, what is a daunting task that is hard to achieve but that almost every modern game engine supports in some way? Agnostic graphics backends for rendering. Some of the biggest studios can run their engines across all major platforms (Linux, Windows, Mac, Android and even the Web) using a variety of backends, from DirectX10/11/12 to WebGL, Vulkan and OpenGL. So before I dive into the deep end, what is the absolute minimum useful thing I can build for myself?

| Windows | Mac | GNU/Linux | WebGL |
|---|---|---|---|

For context, here's an example of Battlefield 1 switching Graphics APIs between DirectX11 and DirectX12 (which requires a restart).

Battlefield 4 with Mantle support. Mantle was the predecessor to the Vulkan Graphics API.

A minimal viable "product"

Over the last few weeks I've been dabbling in how engines handle multiple platforms and graphics APIs. The first few steps seem straightforward and can be split into these tasks:

- Create a platform agnostic application

- Instantiate a window for any platform

- Create a rendering pipeline for each graphics API

So this is what the deep end looks like: these are the steps I'm going to tackle here to get an understanding of the complexities around the tooling, the platform APIs and the graphics APIs.

This isn't intended to be a tutorial of any kind; I'm just messing about here, stretching the ol' brain muscle. But if you're interested, the source for this project will be available here: https://github.com/heyayayron/eleven

Step 1 - Create a platform agnostic application

Thankfully, I didn't actually have to google the first bit: I already know an abstraction library that can handle this part. GLFW to the rescue! But rest assured, there will be lots of Stack Overflow to come (pun intended).

Under the hood, GLFW works in a similar way to SDL or SFML: you pass it some configuration, and it makes the underlying platform API calls for you through its abstraction layer (for window creation, that's glfwCreateWindow).
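To make the "abstraction layer" idea concrete, here is a minimal sketch of the general pattern: one public function, with one implementation per platform chosen at compile time. Every name here (WindowConfig, CreatePlatformWindow, CreateWindowAgnostic) is hypothetical, the bodies just return a description instead of creating a real window, and GLFW's actual internals are considerably more involved.

```cpp
#include <cassert>
#include <string>

// Hypothetical configuration passed in by the application
struct WindowConfig {
    int width;
    int height;
    std::string title;
};

namespace detail {
#if defined(_WIN32)
// Real code would register a window class and call CreateWindowEx (Win32)
inline std::string CreatePlatformWindow(const WindowConfig& cfg) {
    return "Win32 window: " + cfg.title;
}
#elif defined(__APPLE__)
// Real code would create an NSWindow through Cocoa
inline std::string CreatePlatformWindow(const WindowConfig& cfg) {
    return "Cocoa window: " + cfg.title;
}
#else
// Real code would talk to an X11 or Wayland display server
inline std::string CreatePlatformWindow(const WindowConfig& cfg) {
    return "X11/Wayland window: " + cfg.title;
}
#endif
} // namespace detail

// The single entry point application code calls; the platform branch is picked at compile time
inline std::string CreateWindowAgnostic(const WindowConfig& cfg) {
    return detail::CreatePlatformWindow(cfg);
}
```

Application code only ever calls CreateWindowAgnostic({480, 320, "Eleven"}); which branch runs is decided by the preprocessor when the project is built for each platform.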

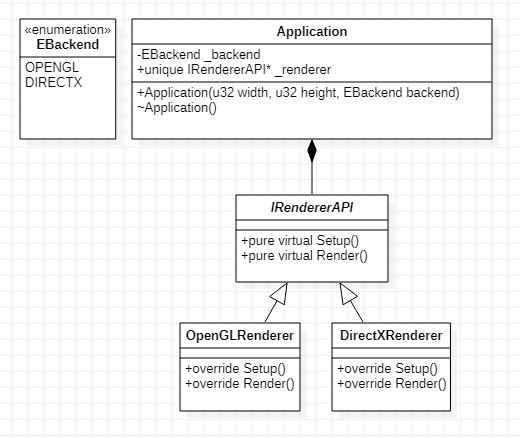

Project architecture

Solution Structure

- src/
  - Renderer/
    - DirectXRenderer.cpp
    - DirectXRenderer.h
    - IRendererAPI.h
    - OpenGLRenderer.cpp
    - OpenGLRenderer.h
  - Application.cpp
  - Application.h
  - Eleven.h
- vendor/
  - dxsdk
  - glad
  - glfw
- CMakeLists.txt
- main.cpp

So, we've included GLFW, created our classes and abstractions. Now we call the required setup methods of GLFW to create our window.

As I'm only focusing on two graphics APIs in this project (OpenGL and DirectX12), I'll keep window instantiation inside their respective renderer classes. This could be abstracted even further, but I'm going to leave it here.
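Keeping each backend behind a common interface is what lets the rest of the application stay backend-agnostic. The sketch below shows one plausible shape for IRendererAPI.h; the method names Setup and Render come from the calls used later in this post, but the exact signatures, the simplified Vertex stand-in and the NullRenderer class are assumptions for illustration, not the repo's code.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>

// Simplified stand-in for Eleven::Vertex so the sketch compiles on its own
struct Vertex {
    float x, y;
    float r, g, b;
};

// Hypothetical contents of IRendererAPI.h; the repo's real interface may differ
class IRendererAPI {
public:
    virtual ~IRendererAPI() = default;
    // Upload geometry once; the count is explicit because a C array decays to a pointer
    virtual void Setup(const Vertex* vertices, std::size_t count) = 0;
    // Draw a single frame
    virtual void Render() = 0;
};

// A do-nothing backend: useful for EBackend::NONE or for testing Application logic
class NullRenderer final : public IRendererAPI {
public:
    void Setup(const Vertex*, std::size_t count) override { _vertexCount = count; }
    void Render() override { ++_framesRendered; }

    std::size_t VertexCount() const { return _vertexCount; }
    int FramesRendered() const { return _framesRendered; }

private:
    std::size_t _vertexCount = 0;
    int _framesRendered = 0;
};

// Callers only ever see the interface type
inline std::unique_ptr<IRendererAPI> MakeNullRenderer() {
    return std::make_unique<NullRenderer>();
}
```

OpenGLRenderer and DirectXRenderer would derive from the same interface, so Application can hold a std::unique_ptr<IRendererAPI> without knowing which backend it owns.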

Step 2 - Instantiate a window for any platform

The Application class acts as a factory: it determines which rendering pipeline is to be created, and the chosen renderer then creates the platform-specific window (retrieving a window handle where applicable).

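#include <iostream>
#include <memory>
#include <stdexcept>

#include "Application.h"
#include "Renderer/DirectXRenderer.h"
#include "Renderer/OpenGLRenderer.h"

Application::Application(u32 width, u32 height, EBackend backend)
{
    // u32 and EBackend are value types and can never be null; reject zero-sized windows instead
    if (width == 0) throw std::invalid_argument("width must be greater than zero");
    if (height == 0) throw std::invalid_argument("height must be greater than zero");

    _width = width;
    _height = height;
    _backend = backend;

    CreateRenderer();
}

void Application::CreateRenderer()
{
    // static_cast<int> prints the enum's value whether EBackend is scoped or unscoped
    switch (_backend)
    {
    case EBackend::NONE:
        std::cout << "Backend NONE(" << static_cast<int>(_backend) << ") selected\n";
        // No renderer is created, so _renderer stays empty
        break;
    case EBackend::OPENGL:
        std::cout << "Backend OPENGL(" << static_cast<int>(_backend) << ") selected\n";
        _renderer = std::make_unique<OpenGLRenderer>(_width, _height);
        break;
    case EBackend::DIRECTX:
        std::cout << "Backend DIRECTX(" << static_cast<int>(_backend) << ") selected\n";
        _renderer = std::make_unique<DirectXRenderer>(_width, _height);
        break;
    }
}

GLFW needs to know which graphics API to use when it creates the window, which is another reason we're keeping window and graphics context creation inside their respective renderer classes.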
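Here is a small sketch of that per-backend decision, made before glfwCreateWindow is called. EBackend mirrors the enum used above (its real definition may differ), while ClientApi and WindowHints are hypothetical stand-ins: in real code ClientApi::OpenGL corresponds to glfwWindowHint(GLFW_CLIENT_API, GLFW_OPENGL_API), which is GLFW's default, and ClientApi::NoApi to GLFW_NO_API.

```cpp
#include <cassert>
#include <stdexcept>

// Mirrors the article's backend enum (assumed to be a scoped enum here)
enum class EBackend { NONE, OPENGL, DIRECTX };

// Stand-in for the value passed to glfwWindowHint(GLFW_CLIENT_API, ...)
enum class ClientApi { OpenGL, NoApi };

struct WindowHints {
    ClientApi clientApi;
    bool needsNativeHandle; // true if we must fetch the HWND ourselves after creation
};

WindowHints HintsFor(EBackend backend) {
    switch (backend) {
    case EBackend::OPENGL:
        // GLFW creates the OpenGL context together with the window
        return {ClientApi::OpenGL, false};
    case EBackend::DIRECTX:
        // No context from GLFW; DirectX is attached to the native window handle instead
        return {ClientApi::NoApi, true};
    case EBackend::NONE:
        break;
    }
    throw std::invalid_argument("EBackend::NONE does not need a window");
}
```

Each renderer would apply its hints and then create the window, so the OpenGL path and the DirectX path below differ only in what they ask GLFW for up front.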

OpenGLRenderer window

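We call glfwCreateWindow with the width, height and title (plus monitor and share arguments, which are nullptr for a plain window); it returns a GLFWwindow pointer, which we store as _oglContext. Before any OpenGL calls can be made, including loading the function pointers with glad, that context also has to be made current with glfwMakeContextCurrent(_oglContext).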

Then we call glfwShowWindow(_oglContext) and wham! we've got a window.

DirectXRenderer window

For anything other than OpenGL contexts, GLFW needs the following window hints:

glfwWindowHint(GLFW_CLIENT_API, GLFW_NO_API); // don't create an OpenGL or OpenGL ES context
glfwWindowHint(GLFW_CONTEXT_CREATION_API, GLFW_NATIVE_CONTEXT_API); // only applies when a context is created, so it's ignored alongside GLFW_NO_API

These hints tell GLFW to skip creating a graphics API context for the window, so that we can retrieve the native window handle and attach our own graphics API context to it.

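Then we can call glfwCreateWindow, followed by glfwGetWin32Window and GetDC, to get our HWND and device context (HDC) ready for DirectX. Note that glfwGetWin32Window is only declared when GLFW_EXPOSE_NATIVE_WIN32 is defined before including GLFW/glfw3native.h.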

Step 3 - OpenGL rendering pipeline

In OpenGLRenderer::Setup we create our vertex buffers, compile the vertex and fragment shaders, and link them into a shader program.
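For a coloured triangle, the two shaders can be tiny. The sources below are a hypothetical minimal pair, assuming an OpenGL 3.3 core profile context; they are not necessarily the shaders in the repo. The attribute locations 0 and 1 have to match whatever glVertexAttribPointer calls describe the position and colour in the vertex buffer.

```cpp
#include <cassert>
#include <cstring>

// Vertex shader: passes the 2D position through and forwards the colour.
// The raw string starts directly with #version, which must come first in GLSL.
static const char* kVertexShaderSrc = R"(#version 330 core
layout (location = 0) in vec2 aPos;
layout (location = 1) in vec3 aColour;
out vec3 vColour;
void main()
{
    vColour = aColour;
    gl_Position = vec4(aPos, 0.0, 1.0);
}
)";

// Fragment shader: outputs the interpolated per-vertex colour
static const char* kFragmentShaderSrc = R"(#version 330 core
in vec3 vColour;
out vec4 FragColor;
void main()
{
    FragColor = vec4(vColour, 1.0);
}
)";
```

Each source would be passed to glShaderSource(shader, 1, &kVertexShaderSrc, nullptr) and compiled with glCompileShader; the two shader objects are then attached to a program with glAttachShader and linked with glLinkProgram.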

Calling glfwSwapBuffers(_oglContext) and glfwPollEvents() each frame is all that's needed to establish our render loop on the OpenGL side.
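The shape of that loop is easy to get slightly wrong, so here is a sketch of it with the GLFW calls hidden behind a hypothetical IWindow interface: ShouldClose stands in for glfwWindowShouldClose, SwapBuffers for glfwSwapBuffers and PollEvents for glfwPollEvents. The fake window and counting renderer exist only so the loop can run without a display.

```cpp
#include <cassert>

// Hypothetical wrapper over the three GLFW calls the loop needs
struct IWindow {
    virtual ~IWindow() = default;
    virtual bool ShouldClose() const = 0;
    virtual void SwapBuffers() = 0;
    virtual void PollEvents() = 0;
};

struct IRenderer {
    virtual ~IRenderer() = default;
    virtual void Render() = 0;
};

// Runs until the window asks to close; returns the number of frames presented
int RunLoop(IWindow& window, IRenderer& renderer) {
    int frames = 0;
    while (!window.ShouldClose()) {
        renderer.Render();     // issue draw calls into the back buffer
        window.SwapBuffers();  // present the back buffer
        window.PollEvents();   // handle input and window events (may request close)
        ++frames;
    }
    return frames;
}

// Test doubles: a window that "closes" after a fixed number of event polls
struct FakeWindow : IWindow {
    explicit FakeWindow(int pollsBeforeClose) : remaining(pollsBeforeClose) {}
    bool ShouldClose() const override { return remaining <= 0; }
    void SwapBuffers() override { ++swaps; }
    void PollEvents() override { --remaining; }
    int remaining;
    int swaps = 0;
};

struct CountingRenderer : IRenderer {
    void Render() override { ++draws; }
    int draws = 0;
};
```

With a FakeWindow(3), RunLoop renders, swaps and polls three times and then exits, which is exactly the sequence a real GLFW window goes through until the user closes it.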

For DirectX, it's a bit more involved.

Step 3 - DirectX rendering pipeline

The architecture changes from DirectX11 to DirectX12 have focused on building an API that supports multi-threaded workloads by allowing the programmer to specify their own pipelines. The tradeoff for this flexibility is complexity.

DirectX12 requires the following stages in the pipeline to start drawing vertices:

- Create DX12 Device (D3D12CreateDevice)

- Command queue for the device (CreateCommandQueue)

- Set up the swap chain using our window handle (CreateSwapChainForHwnd)

- Associate the device with our GLFW window

- Create a descriptor heap (CreateDescriptorHeap)

- Create our render target view aka RTV (CreateRenderTargetView)

- Command allocator (CreateCommandAllocator)
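The descriptor heap and RTV steps are mostly pointer arithmetic: one render target view per swap-chain back buffer, laid out at fixed increments from the start of the heap. In the sketch below, CpuDescriptorHandle stands in for D3D12_CPU_DESCRIPTOR_HANDLE (a struct with a single SIZE_T ptr member), and the heap start and increment would really come from GetCPUDescriptorHandleForHeapStart() and GetDescriptorHandleIncrementSize(D3D12_DESCRIPTOR_HEAP_TYPE_RTV). Those values are hardware-specific, so any numbers you plug in are made up.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for D3D12_CPU_DESCRIPTOR_HANDLE
struct CpuDescriptorHandle {
    std::size_t ptr;
};

// Computes the handle for each back buffer's RTV inside an RTV descriptor heap
std::vector<CpuDescriptorHandle> RtvHandles(CpuDescriptorHandle heapStart,
                                            unsigned increment,
                                            unsigned backBufferCount) {
    std::vector<CpuDescriptorHandle> handles;
    handles.reserve(backBufferCount);
    for (unsigned i = 0; i < backBufferCount; ++i) {
        // Descriptor i sits i increments past the heap start; real code would then call
        // device->CreateRenderTargetView(backBuffer[i], nullptr, handles[i])
        handles.push_back({heapStart.ptr + static_cast<std::size_t>(i) * increment});
    }
    return handles;
}
```

The increment must always be queried from the device rather than hard-coded, because descriptor sizes differ between GPUs and drivers.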

If you've done any programming with the Vulkan graphics API, a lot of this will be familiar territory: we're essentially building the entire CPU -> GPU submission path ourselves. Command lists record jobs for the GPU, command allocators own the memory those recorded commands live in, and command queues submit the finished lists to the GPU. It's worth noting that you can have multiple command queues AND multiple command allocators, meaning you can work on multiple frames at the same time (CPU -> GPU fencing permitting).
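The "fencing permitting" part is the classic frames-in-flight pattern: one command allocator per frame, plus the fence value signalled when that frame was submitted, so an allocator is only reset once the GPU has passed that value. This is a CPU-only simulation under that assumption: SimulatedFence stands in for ID3D12Fence, and the GPU finishing work is faked by CompleteUpTo. Real code would call the queue's Signal after ExecuteCommandLists and block with SetEventOnCompletion and WaitForSingleObject instead of asserting.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-in for ID3D12Fence: 'completed' is what GetCompletedValue() would return
struct SimulatedFence {
    std::uint64_t completed = 0;
    void CompleteUpTo(std::uint64_t v) { if (v > completed) completed = v; }
};

template <std::size_t FrameCount>
class FrameRing {
public:
    // Start of frame: this slot's allocator may be reset only once the GPU has
    // reached the fence value recorded when the slot was last submitted
    bool CanReuseAllocator() const {
        return _fence.completed >= _frameFenceValues[_frameIndex];
    }

    // After submitting: remember this frame's fence value, then move to the next slot
    void EndFrame() {
        assert(CanReuseAllocator()); // real code waits on the fence instead
        _frameFenceValues[_frameIndex] = ++_nextFenceValue; // queue->Signal(fence, value)
        _frameIndex = (_frameIndex + 1) % FrameCount;
    }

    SimulatedFence& Fence() { return _fence; }
    std::size_t FrameIndex() const { return _frameIndex; }

private:
    SimulatedFence _fence;
    std::array<std::uint64_t, FrameCount> _frameFenceValues{}; // 0 = never submitted
    std::uint64_t _nextFenceValue = 0;
    std::size_t _frameIndex = 0;
};
```

With two frames in flight, the CPU can record frame N+1 while the GPU still works on frame N, but it has to stop before touching frame N's allocator again until the fence says that work is done.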

I'm creating Eleven::Vertex, Eleven::vec2 and Eleven::vec3 structs to be used across both backends. I should be able to pass the same vertex data into each backend and get the same triangles rendered; switching backends should produce the same result.
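Because both backends read the same bytes, it can help to describe the vertex layout once: a stride plus a byte offset per attribute. This sketch assumes vec2 and vec3 are plain float structs (the real Eleven.h types may add constructors), and AttributeDesc is a hypothetical backend-neutral type, not part of either graphics API.

```cpp
#include <cassert>
#include <cstddef>

namespace Eleven {
// Assumption: plain float structs with no padding
struct vec2 { float x, y; };
struct vec3 { float r, g, b; };

struct Vertex {
    vec2 pos;
    vec3 colour;
};

// Backend-neutral description of one vertex attribute
struct AttributeDesc {
    unsigned location;       // GL attribute location; D3D12 uses a semantic name instead
    unsigned componentCount; // number of floats in the attribute
    std::size_t offset;      // bytes from the start of a Vertex
};

constexpr std::size_t kVertexStride = sizeof(Vertex);
constexpr AttributeDesc kVertexAttributes[] = {
    {0, 2, offsetof(Vertex, pos)},
    {1, 3, offsetof(Vertex, colour)},
};
} // namespace Eleven

// Five floats with no padding: catch any surprise at compile time
static_assert(sizeof(Eleven::Vertex) == 5 * sizeof(float), "Vertex must be tightly packed");
```

On the OpenGL side each entry maps to glVertexAttribPointer(location, componentCount, GL_FLOAT, GL_FALSE, kVertexStride, reinterpret_cast<void*>(offset)); on the DirectX side the same offsets go into the AlignedByteOffset of each D3D12_INPUT_ELEMENT_DESC, with DXGI_FORMAT_R32G32_FLOAT and DXGI_FORMAT_R32G32B32_FLOAT as the formats.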

struct Vertex
{
    vec2 pos;    // x, y position
    vec3 colour; // r, g, b colour

    Vertex() {}

    Vertex(float x, float y, float r, float g, float b)
        : pos(x, y), colour(r, g, b) {}
};

We use this vertex struct in Application::Run, just before the call to Render():

Eleven::Vertex vertices[3] =
{
    Eleven::Vertex(-0.6f, -0.4f, 1.f, 0.f, 0.f),
    Eleven::Vertex( 0.6f, -0.4f, 0.f, 1.f, 0.f),
    Eleven::Vertex( 0.f,   0.6f, 0.f, 0.f, 1.f)
};

_renderer->Setup(vertices);

Switching backends

This iteration of the project doesn't support switching backends dynamically at runtime, so to change between them you must set the backend and recompile.

The backend is specified in main.cpp:

auto application = new Application(480, 320, EBackend::OPENGL);
// auto application = new Application(480, 320, EBackend::DIRECTX);

Wrapping up part 1

So that's it for part 1: we've created our pipelines and windowing, and abstracted some data types for use in rendering. Bookmark the blog for part 2, where I'll further explore dynamic switching between backends and abstracting vertex and index buffers so that shapes draw 1:1 across APIs. We'll also cover unified shaders at some point.